As the demand for high-quality video content continues to skyrocket, driven by streaming platforms, virtual reality, video conferencing, and social media, efficient video coding and compression techniques have become more critical than ever. Traditionally, video compression standards like H.264, HEVC, and AV1 have achieved impressive results, but the increasing volume and resolution of video data—ranging from 4K to 8K and beyond—are pushing these methods to their limits. This is where Artificial Intelligence (AI) is stepping in, offering a transformative approach to video coding and compression that is not only more adaptable but also more efficient.

Understanding Traditional Video Compression

Before exploring AI’s role, it’s essential to understand how traditional video compression works. Standard coding systems rely on mathematical models and hand-designed algorithms to reduce redundancies in video sequences. Techniques like motion estimation, intra- and inter-frame compression, and entropy coding help to shrink file sizes while attempting to retain quality. Although effective, these tools are tuned by engineers for broad classes of content, so they can’t fully adapt to the diverse conditions of real-world video.
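
To make motion estimation concrete, here is a minimal sketch of exhaustive block matching on tiny grayscale frames. The function names, the 2x2 block size, and the one-pixel search range are illustrative assumptions, not taken from any particular codec; real encoders use far larger blocks and smarter search patterns.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(a - b)
               for row_a, row_b in zip(block_a, block_b)
               for a, b in zip(row_a, row_b))


def get_block(frame, top, left, size):
    """Cut a size x size block out of a frame stored as a list of rows."""
    return [row[left:left + size] for row in frame[top:top + size]]


def best_motion_vector(prev_frame, cur_frame, top, left, size=2, search=1):
    """Find the (dy, dx) offset into prev_frame that best matches a block of cur_frame."""
    target = get_block(cur_frame, top, left, size)
    height, width = len(prev_frame), len(prev_frame[0])
    best_vector, best_cost = (0, 0), float("inf")
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            # Skip candidates that would fall outside the previous frame.
            if 0 <= y <= height - size and 0 <= x <= width - size:
                cost = sad(target, get_block(prev_frame, y, x, size))
                if cost < best_cost:
                    best_vector, best_cost = (dy, dx), cost
    return best_vector, best_cost


# Hypothetical frames: a bright 2x2 patch moves one pixel to the right.
PREV = [[0, 0, 0, 0],
        [0, 9, 8, 0],
        [0, 7, 6, 0],
        [0, 0, 0, 0]]
CUR = [[0, 0, 0, 0],
       [0, 0, 9, 8],
       [0, 0, 7, 6],
       [0, 0, 0, 0]]

vector, cost = best_motion_vector(PREV, CUR, top=1, left=2)
print(vector, cost)
```

Instead of storing the patch again, an encoder can store the offset plus whatever small residual remains, which is the core idea behind inter-frame compression.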

How AI is Changing the Game

AI introduces a paradigm shift in video compression by enabling adaptive, data-driven models that learn to optimize based on content characteristics. Unlike traditional methods, AI-powered approaches can tailor compression strategies dynamically, improving performance across a wider variety of video types and scenarios.

Here are some of the groundbreaking ways in which AI is revolutionizing video coding and compression:

- Content-Aware Compression: AI models can analyze video scenes to identify critical elements—such as faces, text, or motion—and preserve them in higher fidelity, while more redundant areas are compressed aggressively.

- Neural Network-Based Codecs: Deep learning architectures, like autoencoders and generative adversarial networks (GANs), are being used to encode and decode video content with better compression ratios and minimal quality loss.

- Perceptual Quality Optimization: AI-driven codecs can focus on how humans perceive video, prioritizing visual features that matter most to viewers rather than relying purely on mathematical loss measures like PSNR (Peak Signal-to-Noise Ratio).

- Efficient Bitrate Allocation: Machine learning models can predict scenes or frames that need higher bitrates and allocate resources accordingly, improving overall video quality without increasing file size.
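
Two of the ideas above can be sketched in a few lines: the PSNR measure that perceptual approaches try to move beyond, and a bitrate split driven by per-scene scores. The `allocate_bits` helper and the complexity scores are hypothetical; in a real system the scores would come from a trained model, and the split would be subject to many more constraints.

```python
import math


def psnr(original, decoded, max_value=255):
    """PSNR in dB between two equally sized lists of pixel values."""
    mse = sum((o - d) ** 2 for o, d in zip(original, decoded)) / len(original)
    if mse == 0:
        return float("inf")  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)


def allocate_bits(complexities, total_bits):
    """Split total_bits across scenes in proportion to their complexity scores."""
    total = sum(complexities)
    return [total_bits * c / total for c in complexities]


# Hypothetical scores: the second scene is three times as complex as the first.
print(allocate_bits([1, 3], 1000))
print(psnr([100, 120, 140], [101, 119, 142]))
```

PSNR treats every pixel error the same, which is exactly why it can disagree with what viewers notice. A perceptual metric would weight errors on faces or text more heavily than errors in a blurred background.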

Real-World Applications and Use Cases

AI-driven video compression isn’t just a lab experiment—it’s already being deployed in various real-world scenarios to significant effect:

- Streaming Services: Companies like Netflix and YouTube use AI to optimize video delivery, analyzing user device capabilities, network speeds, and even viewer behavior to deliver the best version of the content with minimal bandwidth usage.

- Video Conferencing: With the rise of remote work, tools like Zoom and Microsoft Teams leverage AI to maintain high-quality video under inconsistent network conditions by dynamically adjusting compression strategies.

- Surveillance Systems: AI helps compress vast hours of surveillance footage without losing critical visual information, such as faces or license plates, allowing for efficient storage and quick retrieval.
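
The adaptation loop behind streaming and conferencing can be illustrated with a simple rule: pick the highest-quality rendition that fits the available bandwidth. This is a hand-written heuristic, not a description of how any named service works. The ladder values, the `pick_rendition` name, and the 0.8 safety factor are assumptions; an AI-driven system might replace the measured throughput with a learned prediction.

```python
def pick_rendition(ladder, throughput_kbps, safety=0.8):
    """Return the highest-bitrate rendition that fits within a safety margin.

    Falls back to the lowest-bitrate rendition when nothing fits.
    """
    budget = throughput_kbps * safety
    affordable = [r for r in ladder if r["kbps"] <= budget]
    if not affordable:
        return min(ladder, key=lambda r: r["kbps"])
    return max(affordable, key=lambda r: r["kbps"])


# Hypothetical bitrate ladder for one title.
LADDER = [
    {"name": "360p", "kbps": 800},
    {"name": "720p", "kbps": 2500},
    {"name": "1080p", "kbps": 5000},
]

for measured in (500, 4000, 10000):
    print(measured, pick_rendition(LADDER, measured)["name"])
```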

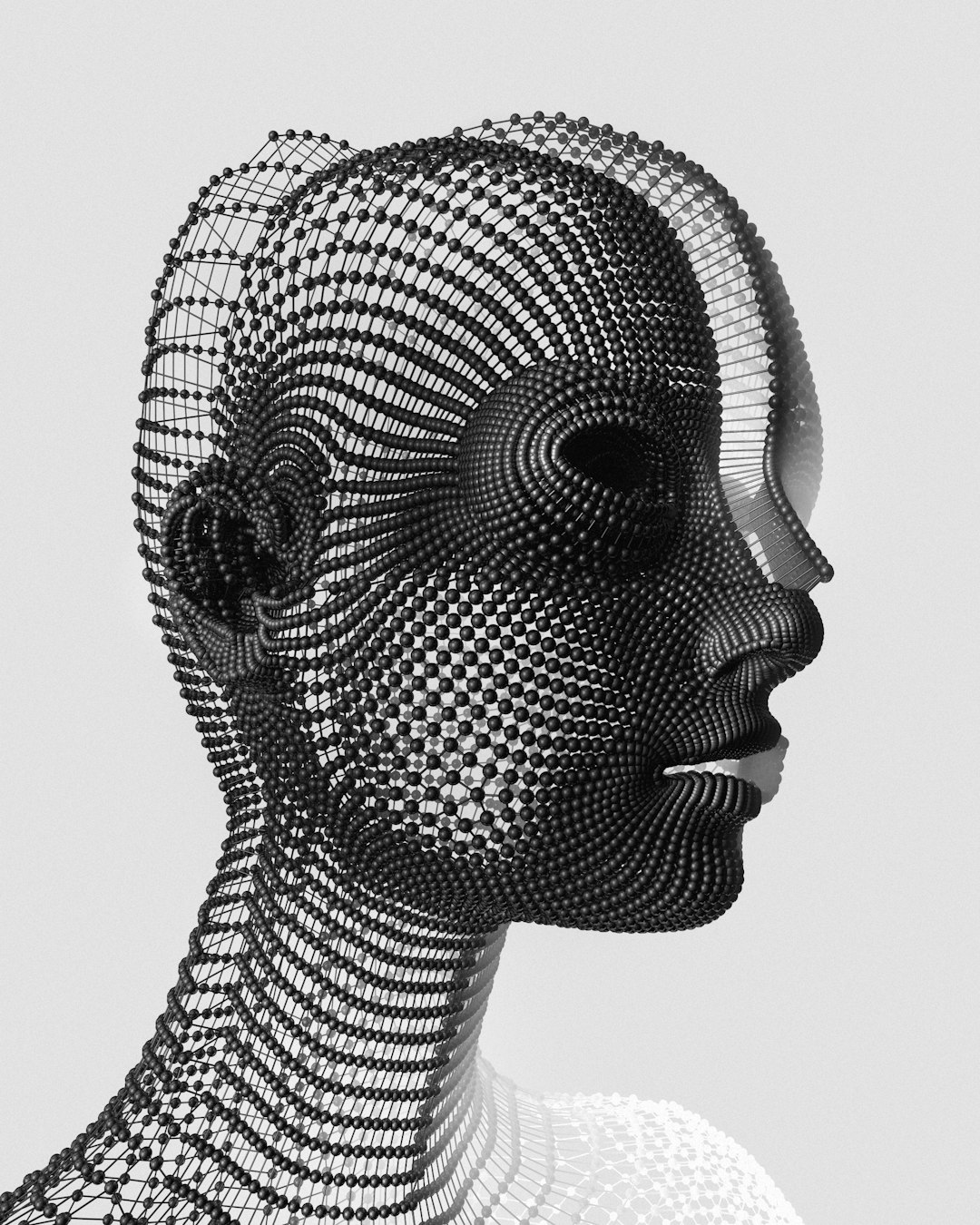

The Role of Deep Learning Models

At the heart of AI-based video compression lies deep learning, a subfield of machine learning loosely inspired by the human brain. Deep neural networks learn representations of data through multiple layers of abstraction, which makes them well suited to modeling the complex structure of video content.

Popular deep learning models used in video compression include:

- Autoencoders: These networks learn to compress data into a smaller, encoded format and then reconstruct it. In video, this means learning the most efficient way to represent each frame or segment.

- Convolutional Neural Networks (CNNs): CNNs are specially designed for image and spatial pattern recognition, making them ideal for extracting features and textures in frames.

- Recurrent Neural Networks (RNNs): Since video is inherently temporal, RNNs—or more advanced versions like Long Short-Term Memory (LSTM) networks—help in understanding time-based changes in scenes, which is crucial for inter-frame compression.
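
The autoencoder idea can be shown at toy scale. The sketch below trains a linear autoencoder with plain gradient descent to squeeze two correlated values into one latent number and back. Everything here (the sample data, the starting weights, the learning rate) is an assumption chosen for illustration; production codecs use deep convolutional networks trained on real frames.

```python
def encode(w, x):
    """Compress a 2-value sample into a single latent number."""
    return w[0] * x[0] + w[1] * x[1]


def decode(v, z):
    """Expand the latent number back into 2 values."""
    return [v[0] * z, v[1] * z]


def mean_loss(w, v, samples):
    """Mean squared reconstruction error over all samples."""
    total = 0.0
    for x in samples:
        x_hat = decode(v, encode(w, x))
        total += sum((x_hat[i] - x[i]) ** 2 for i in range(2))
    return total / len(samples)


def train(samples, steps=3000, lr=0.02):
    """Fit encoder weights w and decoder weights v by full-batch gradient descent."""
    w = [0.1, 0.1]
    v = [0.1, 0.1]
    n = len(samples)
    for _ in range(steps):
        grad_w = [0.0, 0.0]
        grad_v = [0.0, 0.0]
        for x in samples:
            z = encode(w, x)
            err = [v[i] * z - x[i] for i in range(2)]
            # Gradient of the squared error with respect to the latent value.
            dz = sum(2 * err[i] * v[i] for i in range(2))
            for i in range(2):
                grad_v[i] += 2 * err[i] * z / n
                grad_w[i] += dz * x[i] / n
        w = [w[i] - lr * grad_w[i] for i in range(2)]
        v = [v[i] - lr * grad_v[i] for i in range(2)]
    return w, v


# Hypothetical data: the second value is always twice the first,
# so one number is enough to describe each sample.
SAMPLES = [(x, 2 * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

w, v = train(SAMPLES)
print(mean_loss(w, v, SAMPLES))
print(decode(v, encode(w, (1.0, 2.0))))
```

The network is never told that the second value is redundant. It discovers that from the data, which is the property that makes learned codecs adapt to content.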

AI vs Traditional Codecs: Performance and Efficiency

While conventional codecs rely heavily on human-engineered rules and heuristics, AI-based systems learn their behavior from a vast pool of data and can improve as they are retrained. This can translate into gains such as:

- Higher Compression Ratios: Some studies report AI-driven compression algorithms achieving up to 50% lower bitrates compared to traditional codecs like H.264, while maintaining equivalent visual quality.

- Faster Adaptation: AI models can be retrained or fine-tuned to meet specific requirements, such as optimizing for a particular device or network environment.

- Scalability: AI systems handle varied video resolutions—ranging from mobile to cinematic—more gracefully by learning optimal compression patterns across scales.

One related example is Google’s Lyra, a neural audio codec built for low-bitrate voice. It targets speech rather than video, but it shows that learned codecs can be deployed in real products. Similarly, Meta’s “AI Codec Avocado” reportedly claims better compression results than current standards by leveraging transformer models to predict and synthesize video frames at lower bitrates.

Challenges and Considerations

Despite rapid progress, AI-driven video compression is not without challenges:

- Computational Load: Training deep learning models requires significant processing power, usually only accessible via GPUs or high-performance computing clusters.

- Inference Time: Real-time applications like live streaming or gaming pose a challenge, as AI-compressed video must be encoded and decoded in milliseconds to maintain smooth experiences.

- Compatibility: Integration of AI codecs with existing infrastructure, hardware, and platforms is a gradual process that requires standardization and industry collaboration.
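
The inference-time constraint comes down to simple arithmetic: each stage must finish a frame before the next one arrives. The helper names below are hypothetical, and the timings are made-up numbers used only to show the calculation.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def keeps_up(stage_ms, fps):
    """True if a stage that takes stage_ms per frame can sustain the frame rate."""
    return stage_ms <= frame_budget_ms(fps)


print(frame_budget_ms(50))
print(keeps_up(12.0, 60))   # hypothetical decoder taking 12 ms per frame
print(keeps_up(25.0, 60))   # hypothetical decoder taking 25 ms per frame
```

At 60 frames per second the budget is under 17 ms per frame, which is why a neural decoder that looks fast in isolation can still be too slow for live use.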

Nevertheless, software and hardware acceleration—such as using dedicated AI chips or NPUs (Neural Processing Units)—is quickly bridging the gap, making real-time AI video processing increasingly feasible.

Future Outlook

The trajectory suggests that AI will be at the core of next-generation video codecs. Research is already moving towards hybrid models that combine traditional compression techniques with AI enhancements. Standards efforts building on VVC (Versatile Video Coding) are exploring machine learning augmentation, and open-source initiatives are democratizing access to cutting-edge AI models for video.

Moreover, AI paves the way for fully customized video experiences. Imagine a compression engine that knows your preferences, such as sharper character expressions in dramas or smoother action in sports, and adapts on the fly without you ever noticing. This level of personalization could redefine the very notion of “quality” in multimedia content.

Conclusion

AI is not just enhancing video compression—it’s redefining it. By leveraging deep learning and neural networks, researchers and developers are creating smarter, faster, and more effective ways to deliver video content across platforms and devices. While there are still hurdles to clear, the fusion of AI with video coding heralds a new era of intelligent, efficient, and user-focused multimedia experiences.

As demand for bandwidth and high-resolution content grows, AI promises to be the secret weapon that ensures quality and performance don’t just survive but thrive.