OpenAI Gym is an essential toolkit for developing and comparing reinforcement learning algorithms. Designed with flexibility and ease of use in mind, Gym allows researchers, developers, and enthusiasts to test their AI models in simulated environments before applying them to real-world challenges. Whether someone is a beginner looking to explore artificial intelligence or an expert trying to refine algorithms, OpenAI Gym offers a robust and standardized interface.

What Is OpenAI Gym?

OpenAI Gym is an open-source library specifically designed for building and benchmarking reinforcement learning (RL) models. It was introduced by OpenAI to provide a simple, universal interface through which RL algorithms can interact with various simulated environments. Through Gym, developers can test how their models perform in tasks ranging from simple games to complex robotics simulations.

OpenAI Gym supports environments that include classic control problems, Atari games, board games, and even robotics. It is based on Python and provides a standard way to define environments, observe states, take actions, and receive rewards—making it easier to benchmark and iterate on algorithms.

Why Use OpenAI Gym?

Reinforcement learning requires an agent to make decisions based on feedback from its environment. Implementing such systems from scratch can be complex and time-consuming without the right tools. OpenAI Gym simplifies this process by:

- Providing predefined environments: No need to build testing environments from scratch.

- Offering a consistent API: Interfacing with new environments works the same way regardless of the task complexity.

- Fostering reproducibility: Benchmarks and results can easily be compared with others using the same settings and configurations.

- Enabling rapid prototyping: Test multiple algorithms against multiple environments with minimal setup change.

How Does It Work?

OpenAI Gym employs a typical RL loop that consists of the following components:

- Environment: The world in which the agent operates.

- Agent: The AI or model trying to optimize its interactions.

- Action: A move the agent can make.

- Observation: What the agent perceives from the environment.

- Reward: Feedback signal indicating how good the action was.

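Each environment in Gym exposes a reset() and step() interface. reset() starts an episode and returns the first observation. On each step() call the agent passes an action, and in the classic API the environment responds with the next observation, a reward, a flag indicating whether the episode has ended, and a dictionary of diagnostic info. Here’s a basic example: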

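    import gym

    # Classic Gym API (releases before 0.26). From 0.26 on, reset() returns
    # (obs, info) and step() returns five values, with done split into
    # terminated and truncated.
    env = gym.make('CartPole-v1')
    obs = env.reset()

    for _ in range(1000):
        action = env.action_space.sample()  # pick a random action
        obs, reward, done, info = env.step(action)
        if done:
            obs = env.reset()  # start a new episode

    env.close()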
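
The loop above throws the rewards away. As a rough sketch of how the same reset()/step() cycle is usually turned into a per-episode score, the helper below sums rewards until the episode ends. CoinFlipEnv, run_episode, and copy_policy are hypothetical names invented for this illustration. The environment is a dependency-free stand-in that only mimics the classic four-value step() return, so the snippet runs without Gym installed.

```python
import random


class CoinFlipEnv:
    """Hypothetical stand-in that mimics Gym's classic reset()/step() shape."""

    def __init__(self, max_steps=10, seed=0):
        self.max_steps = max_steps
        self.rng = random.Random(seed)
        self.steps = 0
        self.coin = 0

    def reset(self):
        self.steps = 0
        self.coin = self.rng.randint(0, 1)
        return self.coin  # the observation is the current coin face

    def step(self, action):
        # Reward 1.0 when the action matches the coin the agent just observed.
        reward = 1.0 if action == self.coin else 0.0
        self.steps += 1
        self.coin = self.rng.randint(0, 1)  # flip a new coin for the next step
        done = self.steps >= self.max_steps
        return self.coin, reward, done, {}


def run_episode(env, policy):
    """Run one episode with the given policy and return the summed reward."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        obs, reward, done, _info = env.step(policy(obs))
        total += reward
    return total


def copy_policy(obs):
    """Always answer with the coin face that was just observed."""
    return obs


env = CoinFlipEnv(max_steps=10)
returns = [run_episode(env, copy_policy) for _ in range(3)]
print(returns)
```

Because run_episode only relies on reset() and step(), the same function should work unchanged on any environment that follows the classic interface, which is the practical payoff of a consistent API.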

Popular Environments in OpenAI Gym

OpenAI Gym offers a wide range of environments, which fall into the following categories:

- Classic Control: Such as CartPole, MountainCar, and Acrobot.

- Atari: Classic Atari 2600 games like Pong and Breakout, requiring raw pixel inputs and complex strategy.

- Box2D: Environments such as LunarLander and BipedalWalker that simulate physics-based control.

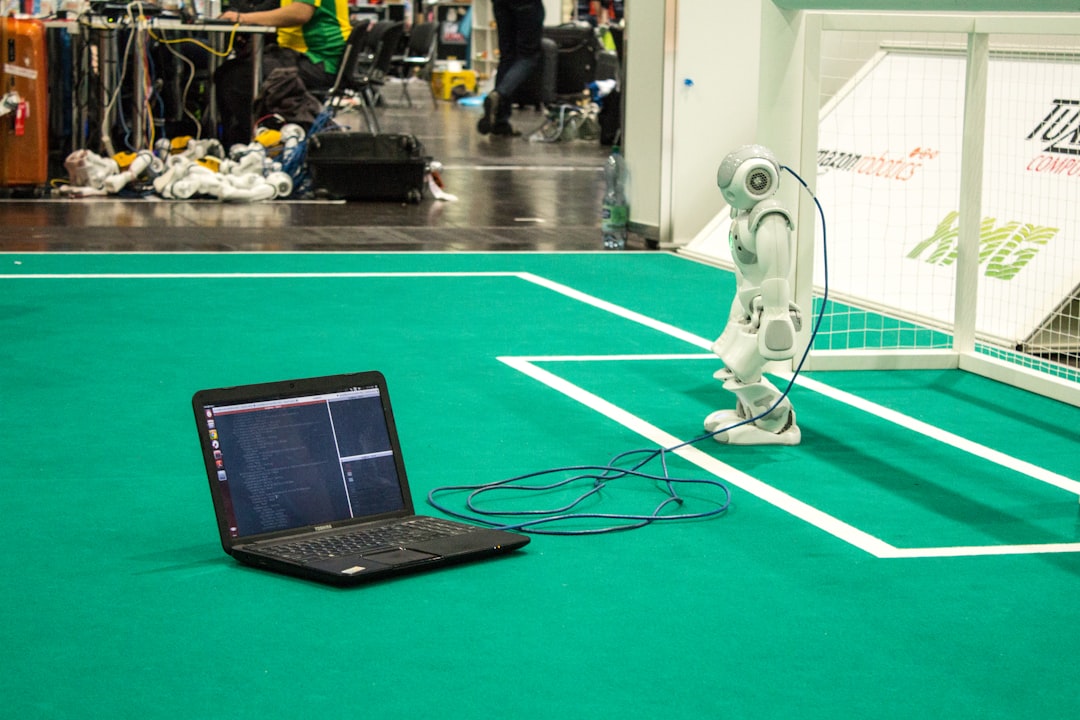

- MuJoCo: Continuous-control and robotics simulations that run on the MuJoCo physics engine, which has to be installed as an additional dependency.

- Robotics (Fetch, Hand): Simulators for robotic arm manipulation and control.

This diverse collection helps developers explore everything from simple algorithm behaviors to advanced AI that requires memory and strategic planning.

Installation and Setup

Getting started with OpenAI Gym is straightforward. It can be installed using pip:

    pip install gym

For more complex environments like Atari or MuJoCo, additional dependencies need to be installed. Documentation for each environment explains these requirements by platform.
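
After installing, it can help to confirm which release pip actually placed in the environment, because the return values of reset() and step() changed in Gym 0.26. The sketch below uses only the Python standard library (3.8 or later). installed_version is a hypothetical helper name, not part of Gym.

```python
from importlib import metadata


def installed_version(dist_name):
    """Return the installed distribution's version string, or None if pip has no record of it."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("gym")
if version is None:
    print("gym is not installed in this environment")
else:
    print(f"gym {version} is installed")
```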

Integration with Other Libraries

OpenAI Gym works seamlessly with many RL libraries and frameworks, including:

- Stable Baselines3 – A set of reliable RL implementations in PyTorch.

- RLlib – A scalable RL library built on Ray.

- Keras-RL – Simple RL with TensorFlow and Keras.

- TensorFlow Agents (TF-Agents).

These integrations allow Gym to serve as the benchmarking backbone for a vast number of RL algorithms from DQN to PPO and A3C.

Advantages of Using OpenAI Gym

There are several advantages to adopting OpenAI Gym for reinforcement learning projects:

- Community Support: With a large user base and contributors, it’s easy to find support and tutorials.

- Wide Range of Environments: From basic to complex, all in one ecosystem.

- Ease of Use: Minimal setup and straightforward Python API.

- Extensibility: Users can create custom environments if needed.

Creating Custom Environments

OpenAI Gym isn’t limited to its built-in environments. Developers can design and register new ones easily. By subclassing the gym.Env class, developers define the required methods (reset(), step(), etc.), observation spaces, and action spaces.

This makes OpenAI Gym ideal for research as well as real-world industrial applications, such as automotive simulations, robotics, and smart grids.
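
The method contract for a custom environment can be sketched without any dependencies. CorridorEnv below is a hypothetical toy environment, not one that ships with Gym. It uses the classic four-value step() return, and plain integers stand in for the action and observation spaces. In a real project the class would subclass gym.Env and declare its spaces with gym.spaces objects.

```python
class CorridorEnv:
    """Hypothetical 1-D corridor: start in cell 0 and walk to the last cell.

    Written without Gym so it runs anywhere. With Gym installed, this class
    would subclass gym.Env and replace n_actions / n_states with space objects.
    """

    LEFT, RIGHT = 0, 1

    def __init__(self, length=5, max_steps=20):
        self.length = length
        self.max_steps = max_steps
        self.n_actions = 2       # stand-in for an action space
        self.n_states = length   # stand-in for an observation space
        self.position = 0
        self.steps = 0

    def reset(self):
        """Start a new episode and return the first observation."""
        self.position = 0
        self.steps = 0
        return self.position

    def step(self, action):
        """Apply one action and return (observation, reward, done, info)."""
        if action not in (self.LEFT, self.RIGHT):
            raise ValueError(f"invalid action: {action!r}")
        move = 1 if action == self.RIGHT else -1
        # Clamp the position so the agent cannot leave the corridor.
        self.position = min(max(self.position + move, 0), self.length - 1)
        self.steps += 1
        at_goal = self.position == self.length - 1
        reward = 1.0 if at_goal else -0.1   # small cost per step, bonus at the goal
        done = at_goal or self.steps >= self.max_steps
        return self.position, reward, done, {"steps": self.steps}


env = CorridorEnv(length=5)
obs = env.reset()
trajectory = [obs]
done = False
while not done:
    obs, reward, done, info = env.step(CorridorEnv.RIGHT)
    trajectory.append(obs)
print(trajectory, reward, info)
```

The step cost and the max_steps cutoff are design choices made for this sketch: the first nudges an agent toward short paths, and the second guarantees that every episode terminates.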

Conclusion

OpenAI Gym is a cornerstone in the world of reinforcement learning. From simple academic environments to complex robotic simulations, it allows developers of all levels to experiment and innovate. Its elegant interface, community support, and integration capabilities make it indispensable for anyone looking to enter the realm of AI and reinforcement learning.

Whether you’re a student trying to understand Q-learning or a researcher developing next-generation agents, Gym provides the playground to transform concepts into working models.

Frequently Asked Questions (FAQ)

- What programming language is OpenAI Gym written in?

- OpenAI Gym is written in Python and is best used with other Python-based data science and machine learning libraries.

- Can I use OpenAI Gym without knowing deep learning?

- Yes, many of the simpler environments don’t require deep learning knowledge. You can use basic algorithms like Q-learning or genetic algorithms to get started.

- Is OpenAI Gym suitable for beginners?

- Absolutely. It is designed to help both beginners and experienced developers practice and test reinforcement learning algorithms in a structured way.

- Is OpenAI Gym actively maintained?

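- Not in its original form. The original Gym repository now sees little active development, and maintenance has moved to Gymnasium, a fork run by the Farama Foundation that keeps the same style of API. Frameworks in the broader ecosystem, including Stable Baselines3, have moved to Gymnasium as well.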

- How can I visualize the environment?

- OpenAI Gym environments can be rendered using the env.render() function, which opens a window or image render of the simulation in progress.

- Are there multiplayer environments available?

- While OpenAI Gym itself focuses on single-agent environments, it can be extended or used with wrappers to simulate multiplayer or multi-agent tasks.

- Can I contribute to OpenAI Gym?

- Yes. As an open-source project, contributions are welcome. You can submit pull requests, report bugs, or create new environments.
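
To make the FAQ's point about getting started without deep learning more concrete, here is a minimal tabular Q-learning sketch. Everything in it is an assumption made for illustration: Corridor is a hypothetical five-cell environment that mimics the classic reset()/step() shape without importing Gym, and the hyperparameters (alpha, gamma, epsilon, episode count) are arbitrary values rather than recommendations.

```python
import random


class Corridor:
    """Hypothetical 5-cell corridor with the classic reset()/step() shape."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        delta = 1 if action == 1 else -1
        self.pos = min(max(self.pos + delta, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else -0.1
        return self.pos, reward, done, {}


def q_learning(env, n_states, n_actions, episodes=200, alpha=0.5,
               gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    """Learn a table of action values with epsilon-greedy Q-learning."""
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.randrange(n_actions)          # explore
            else:
                best = max(q[state])                       # exploit, random tie-break
                action = rng.choice(
                    [a for a in range(n_actions) if q[state][a] == best])
            next_state, reward, done, _info = env.step(action)
            # No bootstrapping from the next state once the episode has ended.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = q_learning(Corridor(), n_states=5, n_actions=2)
# Greedy action for each non-terminal cell (1 means "move right").
greedy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(4)]
print(greedy)
```

Since the only reward sits at the right end of the corridor, the learned greedy action in every non-terminal cell should be 1. The same q_learning function could be pointed at a real Gym environment with a small discrete state space, provided its reset() and step() return values are adapted to the installed version.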