Artificial intelligence is changing many parts of digital media, and video encoding workflows are among the clearest examples. With growing demand for high-quality streaming content and bandwidth efficiency, content creators and streaming platforms are increasingly turning to AI to streamline the encoding process, reduce storage costs, and maintain high video quality.

This article will walk you through a step-by-step guide to implementing AI in your video encoding workflows. Whether you’re a content creator, media engineer, or a tech-savvy enthusiast, understanding this process can give you a head start in the evolving world of digital video technology.

1. Understand the Benefits of AI in Video Encoding

Before jumping into implementation, it’s crucial to understand how AI enhances video encoding:

- Improved Compression Efficiency: AI can better predict motion and patterns, reducing bitrate with little or no visible loss in quality.

- Content-Aware Encoding: AI algorithms analyze each frame to apply optimal compression settings based on motion, texture, or scene changes.

- Cost Reduction: Lower bitrates result in significant savings on storage and data transmission.

- Real-Time Adaptation: Advanced AI-powered encoders adjust dynamically to network conditions and user preferences.

By understanding these benefits, you lay the foundation for making meaningful upgrades to your existing workflow.
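To make the cost-reduction point concrete, here is a minimal sketch of the arithmetic. The bitrates, watch hours, and per-gigabyte price are made-up inputs for illustration, and the function names are hypothetical rather than part of any encoder's API.

```python
def delivered_gb(bitrate_kbps, hours_watched):
    """Gigabytes delivered at an average bitrate over a given watch time."""
    bits = bitrate_kbps * 1000 * hours_watched * 3600
    return bits / 8 / 1e9


def bitrate_savings(baseline_kbps, optimized_kbps, hours_watched, cost_per_gb):
    """Compare delivery volume and cost before and after a bitrate reduction."""
    baseline_gb = delivered_gb(baseline_kbps, hours_watched)
    optimized_gb = delivered_gb(optimized_kbps, hours_watched)
    saved_gb = baseline_gb - optimized_gb
    return {
        "saved_gb": saved_gb,
        "saved_cost": saved_gb * cost_per_gb,
        "reduction_pct": 100 * (baseline_kbps - optimized_kbps) / baseline_kbps,
    }


# Illustrative numbers only: 5 Mbps down to 4 Mbps over 1,000 watch hours
# at an assumed delivery price of 0.05 per GB.
print(bitrate_savings(5000, 4000, 1000, 0.05))
```

Plugging in your own average bitrate and delivery price gives a rough upper bound on what a given bitrate reduction could be worth before you invest in tooling.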

2. Evaluate Your Current Encoding Infrastructure

Start by assessing your current encoding setup. Consider the following:

- Software: Are you relying on open-source tools like FFmpeg or commercial solutions?

- Hardware: Do you have GPUs or accelerators that can support machine learning inference?

- Workflow: How are your encoding jobs triggered, and what file formats and resolutions do you typically handle?

This evaluation will help you identify integration points and determine if you need to upgrade your hardware or software environment.
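Part of this assessment can be scripted. The sketch below assumes `ffmpeg` and, for NVIDIA hardware, `nvidia-smi` are the tools you care about, and it checks for a short list of encoder names chosen here as examples. Adjust both to your own setup.

```python
import shutil
import subprocess

# Example encoder names to look for; this list is an assumption, not a standard.
WANTED_ENCODERS = ["libx264", "libx265", "libsvtav1", "h264_nvenc", "hevc_nvenc"]


def parse_encoder_names(encoders_text):
    """Extract encoder names from the text printed by `ffmpeg -encoders`."""
    names = set()
    for line in encoders_text.splitlines():
        parts = line.split()
        # Encoder rows start with a 6-character flag column such as "V....D".
        if (len(parts) >= 2 and len(parts[0]) == 6
                and parts[0][0] in "VAS" and parts[1] != "="):
            names.add(parts[1])
    return names


def summarize_environment(encoders_text, gpu_tool_found):
    """Report which of the wanted encoders are present and whether a GPU tool exists."""
    available = parse_encoder_names(encoders_text)
    return {
        "encoders": [name for name in WANTED_ENCODERS if name in available],
        "gpu_tool_found": gpu_tool_found,
    }


def inspect_host():
    """Query the local machine; returns an empty encoder list if ffmpeg is missing."""
    gpu_tool_found = shutil.which("nvidia-smi") is not None
    ffmpeg_path = shutil.which("ffmpeg")
    if ffmpeg_path is None:
        return {"encoders": [], "gpu_tool_found": gpu_tool_found, "ffmpeg_found": False}
    listing = subprocess.run(
        [ffmpeg_path, "-hide_banner", "-encoders"],
        capture_output=True, text=True, check=False,
    ).stdout
    summary = summarize_environment(listing, gpu_tool_found)
    summary["ffmpeg_found"] = True
    return summary


if __name__ == "__main__":
    print(inspect_host())
```

Finding `nvidia-smi` on the path only suggests that an NVIDIA driver is installed. It does not confirm that a particular model will run fast enough for your workload.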

3. Choose an AI-Powered Encoding Solution

There are several AI-powered video encoding tools available on the market. Popular ones include:

- NVIDIA DeepStream – a streaming analytics SDK geared toward computer vision on real-time video pipelines, which makes it a fit for the analysis side of a workflow.

- Bitmovin AI encoding – uses machine learning to analyze video files and apply optimal bitrate ladders.

- FFmpeg with AI filters – customizable and open-source; its DNN-based filters (for example, dnn_processing) can run externally trained models, depending on which inference backends your build enables.

Be sure to choose a solution that aligns with your use case (real-time streaming, video-on-demand, etc.) and budget.

4. Prepare Your Training Data (Optional but Recommended)

If you plan to develop or tweak your own AI models for specific content types, you’ll need a training dataset. Here’s how to prepare:

- Select a variety of sample videos – Include different genres, resolutions, and motion complexity levels.

- Annotate or segment your data – Helpful if building a model to detect scene complexity, motion, or object prominence.

Alternatively, many pre-trained models can offer excellent results out of the box, particularly if you’re looking for general-purpose enhancements.
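If you do build your own dataset, one simple starting point is to label clips by motion complexity. The sketch below is a crude heuristic, not a trained model: it assumes each frame is already decoded into a flat list of luma values (0–255), and the thresholds are arbitrary placeholders you would tune on your own content.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average absolute pixel difference between two equally sized frames."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def motion_score(frames):
    """Mean frame-to-frame difference across a clip; 0.0 for fewer than two frames."""
    if len(frames) < 2:
        return 0.0
    diffs = [mean_abs_diff(prev, cur) for prev, cur in zip(frames, frames[1:])]
    return sum(diffs) / len(diffs)


def label_clip(frames, low=2.0, high=10.0):
    """Bucket a clip by motion score. The thresholds are assumed, not calibrated."""
    score = motion_score(frames)
    if score < low:
        return "static"
    if score < high:
        return "moderate"
    return "high_motion"


# Tiny synthetic "clips" of 4-pixel frames, just to show the labels.
print(label_clip([[10, 10, 10, 10]] * 3))
print(label_clip([[0, 0, 0, 0], [50, 50, 50, 50]]))
```

Labels like these can seed a dataset or serve as a sanity check for annotations produced by a heavier model.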

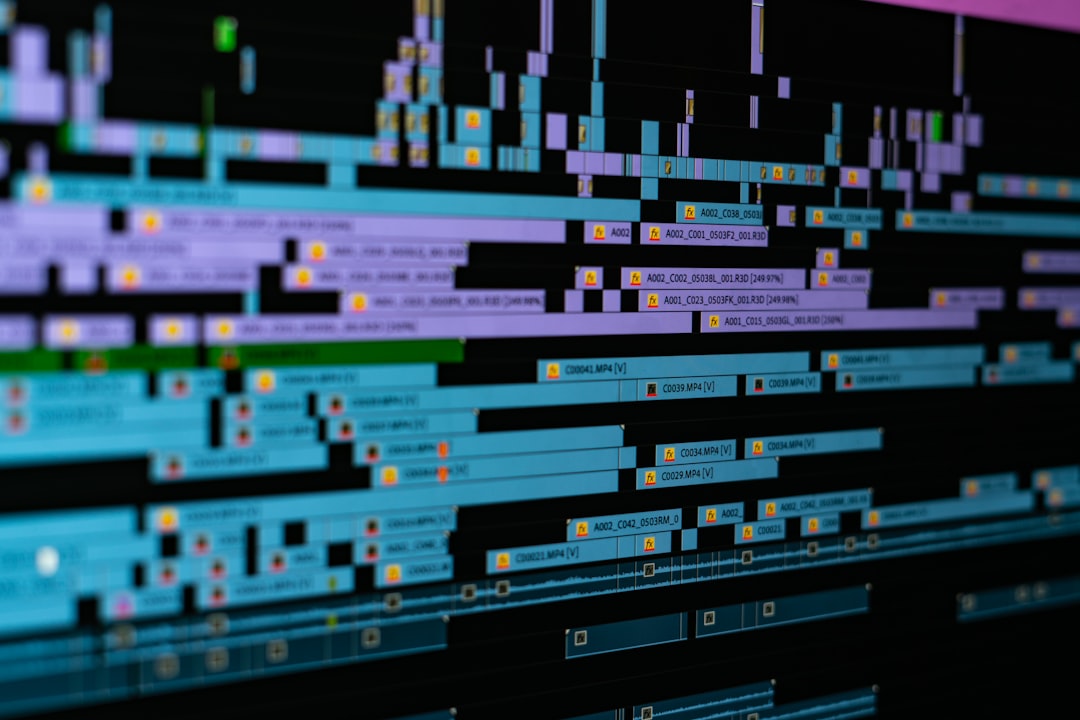

5. Integrate AI into Your Video Pipeline

This is the stage where the real work begins. Here’s a simple way to structure your AI-video workflow:

- Pre-processing: Run input videos through a scene analysis engine using AI to segment and label content.

- Decision Layer: Use AI to choose the optimal encoding strategy for each segment (e.g., higher compression for static scenes).

- Encoding Engine: Apply those decisions via an encoding platform like FFmpeg, using AI-assisted settings or dynamic bitrate ladders.

Some solutions incorporate this seamlessly, whereas others require a custom-built framework. Cloud providers like AWS and Google Cloud also offer APIs to streamline this step.
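The three layers above can be sketched in a few lines. This example assumes the pre-processing stage has already produced segments with a complexity score between 0 and 1 (the `Segment` class and the score are hypothetical). It maps that score to an x264 CRF value and builds an FFmpeg command per segment. The CRF range of 20 to 30 is an illustrative choice, not a recommendation.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float       # segment start time in seconds
    end: float         # segment end time in seconds
    complexity: float  # hypothetical scene-analysis score: 0.0 static, 1.0 complex


def choose_crf(complexity, crf_static=30, crf_complex=20):
    """Decision layer: static scenes get a higher CRF (more compression)."""
    clamped = min(max(complexity, 0.0), 1.0)
    return round(crf_static - clamped * (crf_static - crf_complex))


def build_ffmpeg_command(source, segment, output):
    """Encoding engine: build an FFmpeg argument list for one segment."""
    return [
        "ffmpeg", "-y",
        "-ss", str(segment.start), "-to", str(segment.end),
        "-i", source,
        "-c:v", "libx264", "-preset", "medium",
        "-crf", str(choose_crf(segment.complexity)),
        "-an",  # drop audio here; a real pipeline would handle it separately
        output,
    ]


segments = [Segment(0.0, 4.0, 0.1), Segment(4.0, 9.5, 0.9)]
for index, segment in enumerate(segments):
    print(" ".join(build_ffmpeg_command("input.mp4", segment, f"seg_{index}.mp4")))
```

The commands are only printed here. Passing each list to `subprocess.run` would execute them, and the encoded segments would then need to be concatenated back into one stream.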

6. Measure and Optimize Quality

Once your AI-enhanced encoding pipeline is up and running, you need to ensure it delivers the desired output. Important metrics include:

- PSNR (Peak Signal-to-Noise Ratio) – Measures pixel-level error between the original and encoded frames, expressed in decibels; higher is better.

- SSIM (Structural Similarity Index) – Compares luminance, contrast, and structure between the original and encoded frames.

- VMAF (Video Multimethod Assessment Fusion) – Developed by Netflix, it combines multiple metrics into a video quality score closer to human perception.

AI models can be further fine-tuned by using these metrics as feedback mechanisms to retrain or adjust encoding heuristics.
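As a reference point for the simplest of these metrics, here is a minimal PSNR sketch using the standard definition, 10 · log10(MAX² / MSE). It assumes 8-bit samples and frames supplied as flat lists of pixel values. In practice you would more likely rely on an existing tool than on hand-rolled code.

```python
import math


def mean_squared_error(reference, encoded):
    """Average squared difference between two equally sized frames."""
    if len(reference) != len(encoded):
        raise ValueError("frames must have the same number of pixels")
    return sum((r - e) ** 2 for r, e in zip(reference, encoded)) / len(reference)


def psnr(reference, encoded, max_value=255):
    """PSNR in decibels; identical frames yield infinity."""
    error = mean_squared_error(reference, encoded)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)


original = [100, 120, 140, 160]
compressed = [101, 119, 141, 159]  # every pixel is off by one
print(round(psnr(original, compressed), 2))
```

A per-frame score like this can be averaged over a clip and fed back into whatever logic picks your encoding settings.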

7. Implement Adaptive Bitrate Streaming (ABR)

Artificial intelligence really shines when paired with ABR, especially in live streaming. Here’s how AI elevates ABR:

- Predictive ABR – Uses machine learning to anticipate network drops or bandwidth changes.

- Optimal Ladder Design – AI tools can create custom bitrate ladders tailored to each piece of content, avoiding wasteful bitrates.

This is particularly useful for OTT (Over-the-Top) platforms aiming to deliver smooth playback with minimal buffering on variable networks.
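The idea behind avoiding wasteful bitrates can be illustrated without any machine learning. The sketch below takes candidate encodes as (bitrate, quality score) pairs, for example VMAF measured on trial encodes, and keeps only the rungs that add a meaningful quality gain. The minimum gain and quality ceiling are assumed values, and a production ladder would also account for resolution and device constraints.

```python
def prune_ladder(candidates, min_gain=2.0, quality_ceiling=95.0):
    """Keep rungs that improve quality by at least min_gain over the last kept rung.

    candidates: list of (bitrate_kbps, quality_score) tuples in any order.
    Stops adding rungs once the last kept rung reaches quality_ceiling.
    """
    ladder = []
    for bitrate, quality in sorted(candidates):
        if ladder:
            last_quality = ladder[-1][1]
            if last_quality >= quality_ceiling:
                break  # higher bitrates would add little visible benefit
            if quality - last_quality < min_gain:
                continue  # this rung costs bits without a meaningful gain
        ladder.append((bitrate, quality))
    return ladder


# Made-up measurements for one title.
trial_encodes = [(500, 60), (1000, 75), (2000, 76), (3000, 88), (4500, 96), (6000, 97)]
print(prune_ladder(trial_encodes))
```

With these sample numbers, the 2000 kbps and 6000 kbps rungs are dropped because they add almost nothing over their neighbors.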

8. Monitor Performance and Gather Feedback

After deploying your AI-enhanced workflow, continuous performance monitoring is vital. Key aspects include:

- Encoding Time – Has AI positively or negatively impacted processing speed?

- File Size – Measure storage savings across formats and resolutions.

- User Experience – Lower buffering, improved quality on low-bandwidth connections, faster startup time.

Gathering viewer feedback and watching real-time analytics, such as QoE (Quality of Experience) dashboards, can help refine your AI models further.
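A simple before-and-after comparison covers the first two aspects and one user-experience signal. The metric names (`encode_seconds`, `file_size_mb`, `rebuffer_ratio`) and the 25% tolerance for slower encodes are assumptions made for this sketch. Use whatever your own monitoring exports.

```python
def percent_change(baseline, candidate):
    """Relative change from baseline to candidate, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (candidate - baseline) / baseline


def compare_runs(baseline, candidate, max_time_increase_pct=25.0):
    """Percent change per metric, plus a flag if encoding got too much slower."""
    report = {key: percent_change(baseline[key], candidate[key]) for key in baseline}
    report["encode_time_regression"] = report["encode_seconds"] > max_time_increase_pct
    return report


# Illustrative numbers, not measurements.
before = {"encode_seconds": 100, "file_size_mb": 500, "rebuffer_ratio": 0.02}
after = {"encode_seconds": 120, "file_size_mb": 400, "rebuffer_ratio": 0.015}
print(compare_runs(before, after))
```

Negative values for file size and rebuffering are improvements. A positive value for encoding time is the price paid for them.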

9. Stay Updated on AI and Codec Evolutions

AI in video encoding is a rapidly evolving field. Newer codecs such as AV1 and VVC (Versatile Video Coding) improve compression efficiency over their predecessors, and learned (neural) coding tools are an active area of research alongside them. Additionally, inference frameworks like TensorRT and ONNX Runtime continue to improve model inference speeds, making real-time AI-assisted encoding more feasible.

To remain competitive, participate in communities like the Alliance for Open Media, follow publications on IEEE or Medium, and consider enrolling in courses that cover neural compression techniques.

10. Scale and Automate

Once you’ve validated the AI-enhanced encoding process, it’s time to scale:

- Use container orchestration tools like Kubernetes to scale across multiple nodes.

- Set up job scheduling and load balancing for parallel encoding pipelines.

- Automate model retraining based on feedback loops and periodic reviews.

At scale, AI-powered workflows can provide not only better quality and efficiency, but also strategic insights about viewer habits and content performance.
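Before reaching for a cluster, the parallel-pipeline idea can be tried on a single machine. This sketch uses Python's standard thread pool to run several jobs at once and records failures instead of stopping the batch. The `encode` callable is a placeholder for whatever actually launches your encoder, and the worker count is an arbitrary default.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_jobs(jobs, encode, max_workers=4):
    """Run encode(job) for each job in parallel; return a status per job.

    jobs must be hashable (for example, file names). A failed job is recorded
    as ("failed", message) so one bad input does not abort the others.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(encode, job): job for job in jobs}
        for future in as_completed(futures):
            job = futures[future]
            try:
                results[job] = ("ok", future.result())
            except Exception as exc:
                results[job] = ("failed", str(exc))
    return results


def fake_encode(name):
    """Stand-in for a real encoder call, used only to demonstrate the flow."""
    if name.endswith(".txt"):
        raise ValueError("not a video file")
    return name.replace(".mov", ".mp4")


print(run_jobs(["a.mov", "b.mov", "notes.txt"], fake_encode))
```

Threads are sufficient here because the heavy lifting would happen in external encoder processes. An orchestrator such as Kubernetes applies the same pattern across machines.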

Final Thoughts

Integrating AI into your video encoding workflow is not just about keeping up with technology; it’s about unlocking new levels of efficiency, quality, and customization. From automated scene analysis to predictive streaming and smart compression, the applications are vast and valuable.

Start small with existing tools, and grow with experience and feedback. As AI continues to evolve, so too will its transformative impact on how we create, compress, and deliver video content.